AI Update: 2025 InfraRed Report

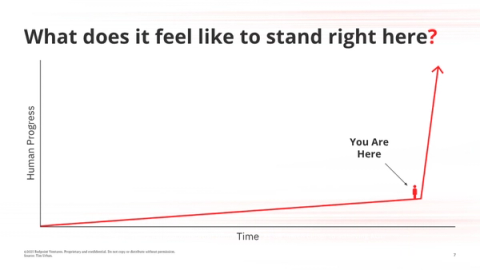

If you rewound the clock a couple of years, the chart above captures pretty much where we all stood.

Over the last 2 years, we have seen incredible progress in technology driven by AI, and it somehow still feels like the best and most exciting progress is yet to come.

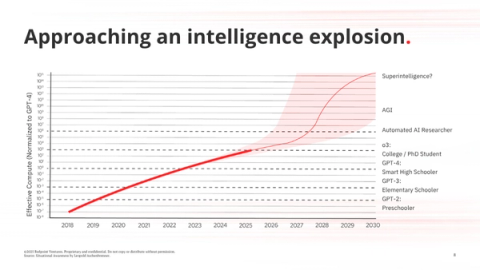

This chart from Leopold Aschenbrenner traces the advancement of LLMs over the last 5 years, from GPT models roughly as smart as elementary school students to PhD level. In the next 5 years, we could unlock superintelligent systems unlike anything we’ve seen before.

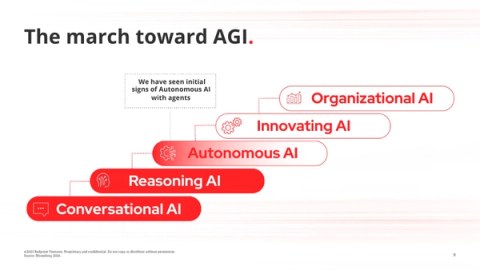

We continue to make tremendous progress toward AGI. This framework, published last year, outlines how OpenAI sees the phases of AI evolving in the coming years.

If you think about it, we’ve made remarkable progress along this timeline in just the last couple of years.

- Conversational AI: achieved with GPT-4 and chatbots like ChatGPT

- Reasoning AI: arrived with the release of OpenAI o1, and exciting advances keep coming

- Autonomous AI: starting to emerge with the release of agentic systems

We could reach the next two phases even faster than we think, since AI adoption has only accelerated.

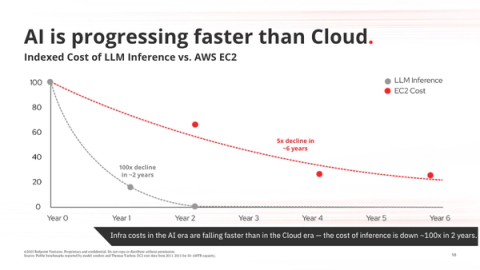

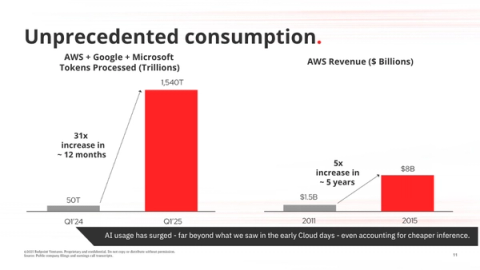

To put all of this in perspective, AI is advancing faster than the cloud did.

The cost of running LLMs has come down faster than EC2 costs did in the early days of the cloud: LLM inference costs have dropped 100x in the last 2 years, whereas EC2 costs fell 5x over 6 years early in the cloud era.

In parallel, LLM usage has exploded, much faster than AWS cloud consumption grew early on. AWS revenue grew 5x from 2011 to 2015. We don’t have an overall revenue figure to compare on the AI side, but consumption tells the story: Microsoft and Google have seen 31x growth in token usage in just the last 12 months.

So let’s absorb that. LLM inference costs have dropped an order of magnitude faster than EC2 costs did during the cloud computing wave. At the same time, consumption in the AI wave is growing far faster than it did in the cloud era.
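As a rough check on the "order of magnitude faster" comparison, we can annualize each figure quoted above, treating a change by a factor $F$ over $T$ years as a constant yearly factor $F^{1/T}$. That is a simplifying assumption; real price declines are lumpier than this.

$$
\begin{aligned}
\text{LLM inference cost:}\quad & 100^{1/2} = 10\times \text{ cheaper per year} \\
\text{EC2 cost:}\quad & 5^{1/6} \approx 1.31\times \text{ cheaper per year} \\
\text{Ratio of log-rates:}\quad & \frac{\ln(100)/2}{\ln(5)/6} \approx \frac{2.30}{0.268} \approx 8.6
\end{aligned}
$$

The same treatment puts AWS revenue growth at $5^{1/4} \approx 1.50\times$ per year over 2011 to 2015, against 31x token growth in a single year. Revenue and token counts are different units, so this compares growth rates only, not absolute size.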

It’s increasingly clear that AI is the next platform shift, and it’s exciting to see how quickly the transformation is happening.

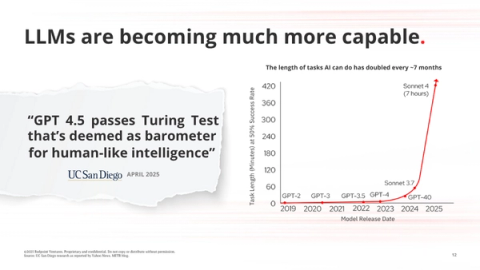

If we think about the most exciting developments in AI over the past year, first and foremost is how capable LLMs have become.

This spring, researchers from UC San Diego showed that GPT-4.5 passed the Turing test, long considered the benchmark for whether a machine can convincingly pass as human.

These models have also become capable of handling longer, more complex tasks. The graph above shows the length of tasks LLMs can complete at a 50% success rate, and the progress has been incredible.

With the recent announcement of Claude 4, we now have models that can run tasks for up to 7 hours in the background. Just a couple of years ago, these models couldn’t handle tasks longer than a minute.

These models continue to improve at such an impressive rate and are quickly approaching human-level capabilities.

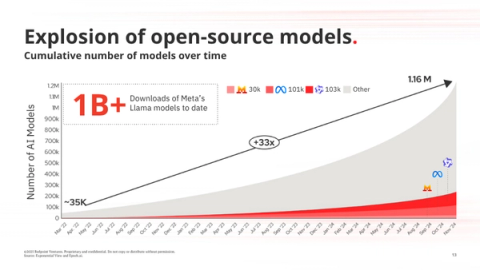

We’ve also seen an explosion of open-source models. There are now over 1M open-source models publicly available.

Meta recently announced that its Llama models have been downloaded 1B times over the last couple of years. The open-source ecosystem remains strong, and it’s exciting to see capable LLMs from the likes of Alibaba, Meta, and Mistral.

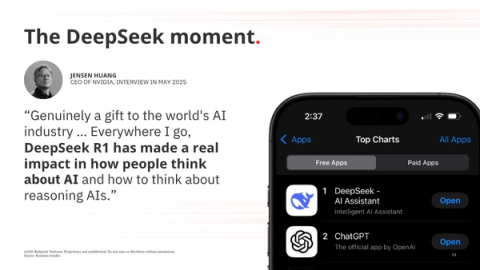

Of course, we saw the DeepSeek release.

The tech community was shocked when DeepSeek, somewhat out of the blue, released a cheaper, open-source SOTA reasoning model. More importantly, DeepSeek demonstrated an incredible breakthrough: a way to scale intelligent models through reinforcement learning rather than expensive supervised fine-tuning. Its R1-Zero model developed strong reasoning behavior through pure RL, with no supervised fine-tuning step at all.
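DeepSeek’s papers describe Group Relative Policy Optimization (GRPO): sample a group of completions for each prompt, score them with rule-based rewards, and compute each completion’s advantage relative to its own group, which removes the need for a separate critic (value) model. The toy sketch below shows only that advantage computation. The "Answer:" format convention behind the reward is a made-up assumption, this is not DeepSeek’s training code, and the policy-gradient update itself is omitted.

```python
import statistics
from typing import List


def rule_based_reward(completion: str, reference_answer: str) -> float:
    """Toy reward (hypothetical format): 1.0 if the text after the last
    'Answer:' matches the reference exactly, otherwise 0.0."""
    marker = "Answer:"
    if marker not in completion:
        return 0.0  # a format failure earns nothing
    answer = completion.rsplit(marker, 1)[1].strip()
    return 1.0 if answer == reference_answer else 0.0


def group_relative_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """GRPO-style advantages: normalize each reward against its own group's
    mean and standard deviation, so no learned critic is needed."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


if __name__ == "__main__":
    # A group of sampled completions for the same prompt: "What is 6 * 7?"
    group = [
        "6 * 7 = 42. Answer: 42",
        "Let me think... Answer: 48",
        "The result is 42",  # correct value, but missing the required marker
        "6 times 7 is 42. Answer: 42",
    ]
    rewards = [rule_based_reward(c, "42") for c in group]
    for completion, advantage in zip(group, group_relative_advantages(rewards)):
        print(f"{advantage:+.2f}  {completion!r}")
```

Completions that beat their group’s average get a positive advantage and are reinforced; the rest are pushed down. Because the baseline comes from the group itself, an all-correct or all-wrong group produces zero advantage and no learning signal for that prompt.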

Despite the immediate selloff in NVIDIA’s stock, Jensen Huang himself has emphasized the impact DeepSeek will have on the next phase of innovation in LLMs.

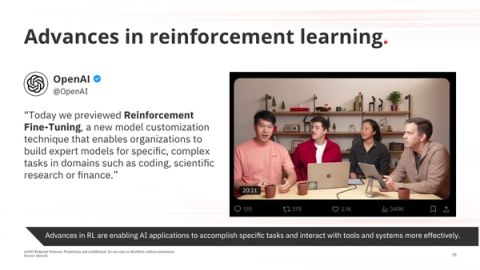

OpenAI has been working on RL for some time, and it recently released reinforcement fine-tuning (RFT) through its API, enabling developers to tune models for domain-specific tasks.
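Reinforcement fine-tuning is driven by a grader that scores each model output; that score becomes the reward. As a hedged illustration, here is a plain-Python grader for a hypothetical contract-clause labeling task that gives partial credit through F1. The function name and the comma-separated output format are assumptions, and this is not OpenAI’s grader interface: the API defines its own grader types, so check its documentation before adapting this.

```python
from typing import List


def grade_label_set(predicted: str, expected: List[str]) -> float:
    """Hypothetical domain grader: F1 between predicted and expected labels.

    `predicted` is assumed to be a comma-separated list of labels emitted by
    the model. Returns a score in [0, 1]; higher scores mean higher reward.
    """
    pred = {p.strip().upper() for p in predicted.split(",") if p.strip()}
    gold = {e.strip().upper() for e in expected}
    if not pred or not gold:
        # Only an exact match of "no labels" earns credit here.
        return 1.0 if pred == gold else 0.0
    true_pos = len(pred & gold)
    if true_pos == 0:
        return 0.0
    precision = true_pos / len(pred)
    recall = true_pos / len(gold)
    return 2 * precision * recall / (precision + recall)


print(grade_label_set("indemnity, termination", ["INDEMNITY", "TERMINATION", "GOVERNING_LAW"]))
```

Here the model found two of the three expected clause types with no false positives, so it scores about 0.8 rather than 0. Partial credit like this gives RL a smoother signal than a pass/fail check.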

We expect continued advances in RL techniques like this to make LLMs more powerful in production and let them do remarkable things.

All of this will ultimately manifest in agents.

Agents are perhaps the most exciting implication of these advances.

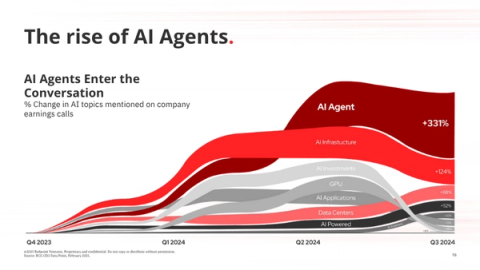

Recently we have seen an explosion of interest in AI agents. This chart tracks AI-related topics mentioned on public company earnings calls. Over the last couple of quarters, mentions of AI agents have surged, in part because of the infra developments we’ve discussed that make agents more feasible.

These agents are enabling builders to leverage LLMs to reimagine existing markets and create new ones.

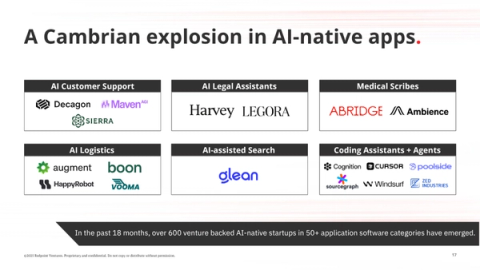

We’ve already seen a Cambrian explosion in AI apps: we’ve counted over 600 venture-backed, AI-native startups emerging across 50+ categories in the past 18 months, with clear standalone winners like Harvey and Legora in legal, Sierra and Decagon in customer support, and Abridge in healthcare.

We can’t wait to see how agents push this explosion in apps even further. Coding is already being transformed by agents from companies like Cognition and Sourcegraph.

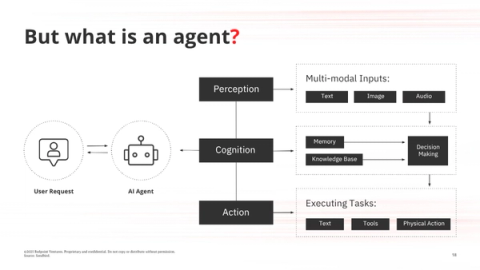

There is no settled definition of an agent yet, but we think of an agent as an autonomous system in which an LLM decides which steps to take and/or which tools to call.

You give an LLM access to tools and a knowledge base, and the agent uses that information to execute tasks, often with limited or no human instruction.
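The loop described above can be sketched in a few lines: the model picks an action, the runtime executes the chosen tool, and the observation is fed back until the model returns a final answer. This is a minimal sketch under stated assumptions: `fake_llm` is a scripted stand-in for a real model call, and the tools and knowledge base are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple, Union


@dataclass
class ToolCall:
    name: str
    argument: str


@dataclass
class FinalAnswer:
    text: str


Action = Union[ToolCall, FinalAnswer]

# Hypothetical knowledge base and tools, for illustration only.
KNOWLEDGE_BASE = {"refund_window": "Refunds are accepted within 30 days of purchase."}


def search_kb(query: str) -> str:
    return KNOWLEDGE_BASE.get(query, "no match")


def word_count(text: str) -> str:
    return str(len(text.split()))


TOOLS: Dict[str, Callable[[str], str]] = {"search_kb": search_kb, "word_count": word_count}


def fake_llm(task: str, history: List[Tuple[ToolCall, str]]) -> Action:
    """Scripted stand-in for a model call. A real agent would send the task,
    the tool descriptions, and the history to an LLM and parse its chosen action."""
    if not history:
        return ToolCall(name="search_kb", argument="refund_window")
    last_observation = history[-1][1]
    return FinalAnswer(text=f"Per our policy: {last_observation}")


def run_agent(task: str, max_steps: int = 5) -> str:
    history: List[Tuple[ToolCall, str]] = []
    for _ in range(max_steps):
        action = fake_llm(task, history)
        if isinstance(action, FinalAnswer):
            return action.text  # the model decided it is done
        tool = TOOLS.get(action.name)
        observation = tool(action.argument) if tool else f"unknown tool: {action.name}"
        history.append((action, observation))  # fed back to the model on the next step
    return "stopped: step limit reached"


print(run_agent("How long do customers have to request a refund?"))
```

The `max_steps` cap matters in practice: an agent that never settles on an answer keeps spending tokens, which feeds directly into the cost and latency concerns engineering leaders raise.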

Despite all the infrastructure advances we’ve talked about, it’s not easy to build agents today.

The top challenges we hear about from engineering leaders today are output quality, cost, and latency.

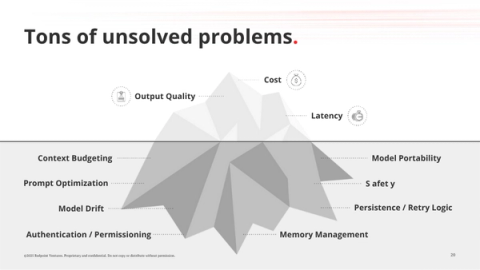

The reality is there are a number of granular issues that developers must consider when architecting their agentic systems.

Some of the top issues we’ve heard about include:

- Context budgeting: what information you give your model, how much, and when

- Model drift: how your performance changes when the data or the model’s input variables change

- Authentication: how to permission agents so they can access only what they should, and only for the appropriate session duration
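To make context budgeting concrete, here is a minimal sketch that greedily packs the most relevant retrieved chunks into a fixed token budget while reserving room for the prompt and the answer. The `Chunk` type, the relevance scores, and the one-token-per-word estimate are all simplifying assumptions; a production system would count tokens with the model’s own tokenizer.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Chunk:
    text: str
    relevance: float  # assumed to come from a retriever; higher is better


def approx_tokens(text: str) -> int:
    # Crude assumption: one token per whitespace-separated word.
    return len(text.split())


def budget_context(chunks: List[Chunk], budget: int, reserved: int = 0) -> List[Chunk]:
    """Greedily keep the most relevant chunks that fit within `budget` tokens,
    after setting aside `reserved` tokens (e.g. system prompt and answer)."""
    remaining = budget - reserved
    selected: List[Chunk] = []
    for chunk in sorted(chunks, key=lambda c: c.relevance, reverse=True):
        cost = approx_tokens(chunk.text)
        if cost <= remaining:
            selected.append(chunk)
            remaining -= cost
    return selected


chunks = [
    Chunk("the company was founded in 1999 in Ohio", 0.2),
    Chunk("refund policy: 30 days", 0.9),
    Chunk("shipping takes five business days on average", 0.4),
]
for chunk in budget_context(chunks, budget=15, reserved=3):
    print(chunk.relevance, chunk.text)
```

Greedy selection by relevance is simple and predictable but not optimal: one large, highly relevant chunk can crowd out several smaller ones that together would be more useful.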

All of these factors feed into the overarching concerns of quality, cost, and latency, and it’s clear that AI developers face a lot of problems in the weeds today.
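The authentication concern listed above usually comes down to least privilege plus expiry. Here is a minimal sketch of a per-session grant that an agent’s tool calls would be checked against. `AgentGrant`, the scope strings, and the TTL are hypothetical; a real deployment would delegate issuing and verifying credentials to an identity provider rather than an in-memory object.

```python
import time
from dataclasses import dataclass
from typing import FrozenSet, Iterable, Optional


@dataclass(frozen=True)
class AgentGrant:
    """Hypothetical per-session grant: what an agent may access, and until when."""
    agent_id: str
    scopes: FrozenSet[str]  # e.g. {"tickets:read"}; the naming scheme is illustrative
    expires_at: float       # Unix timestamp when the session ends


def issue_grant(agent_id: str, scopes: Iterable[str], ttl_seconds: float,
                now: Optional[float] = None) -> AgentGrant:
    now = time.time() if now is None else now
    return AgentGrant(agent_id, frozenset(scopes), now + ttl_seconds)


def is_allowed(grant: AgentGrant, required_scope: str, now: Optional[float] = None) -> bool:
    """Deny by default: the session must be unexpired and the exact scope granted."""
    now = time.time() if now is None else now
    return now < grant.expires_at and required_scope in grant.scopes


grant = issue_grant("support-agent", {"tickets:read"}, ttl_seconds=900)
print(is_allowed(grant, "tickets:read"), is_allowed(grant, "tickets:write"))
```

Checking every tool call against a short-lived, narrowly scoped grant limits the damage if an agent is misled into attempting something it was never meant to do.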

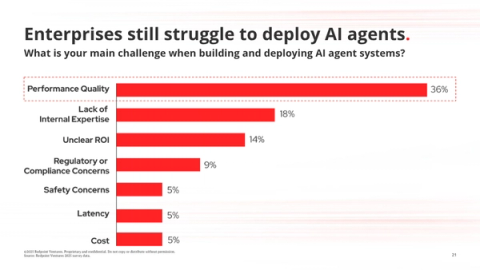

We surveyed dozens of technology leaders and heard similar difficulties when deploying AI agents.

Performance quality was the number 1 challenge identified: these systems are powerful and dynamic, but hard to control.

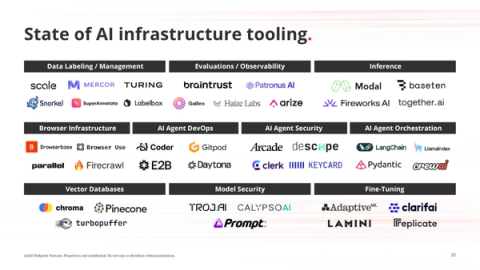

We think this presents a massive opportunity for new AI infra tooling to support AI application and agent development.

Streamlining how we build AI applications only becomes more important as the underlying models take on more interesting workflows, and it’s exciting to see such a robust startup ecosystem emerging here.

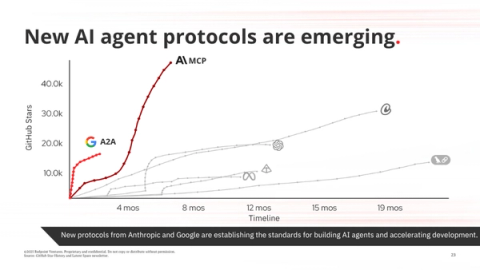

Another exciting development is the emergence of open protocols like Anthropic’s MCP and Google’s A2A. Adoption of both has been staggering.

Anthropic released the Model Context Protocol at the end of 2024, and in just 6 months, it surpassed 40K stars on GitHub as more teams spun up their own MCP servers.

MCP really does seem to be becoming the prevailing standard, and it’s easy to see why: it saves developers from writing custom glue code every time they want to connect a model to a data source or tool.
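Under the hood, MCP messages are JSON-RPC 2.0: a server advertises its tools through a `tools/list` method and executes one through `tools/call`. The sketch below handles just those two methods in-process with the standard library, using a hypothetical `get_weather` tool. It deliberately omits the initialization handshake, capability negotiation, and transport (stdio or HTTP) that a real server needs; in practice you would build on an official MCP SDK.

```python
import json

# One hypothetical tool, described the way tools/list advertises tools:
# a name, a description, and a JSON Schema for its input.
TOOLS = {
    "get_weather": {
        "description": "Return a canned weather report for a city (demo only).",
        "inputSchema": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
        # Demo handler; it does not validate arguments against inputSchema.
        "handler": lambda args: f"It is sunny in {args['city']}.",
    }
}


def handle_request(raw: str) -> str:
    """Dispatch one JSON-RPC 2.0 request for the tools/list and tools/call methods."""
    req = json.loads(raw)
    method, req_id = req.get("method"), req.get("id")
    if method == "tools/list":
        result = {"tools": [
            {"name": name, "description": spec["description"], "inputSchema": spec["inputSchema"]}
            for name, spec in TOOLS.items()
        ]}
    elif method == "tools/call":
        params = req.get("params", {})
        spec = TOOLS.get(params.get("name"))
        if spec is None:
            return json.dumps({"jsonrpc": "2.0", "id": req_id,
                               "error": {"code": -32602, "message": "Unknown tool"}})
        text = spec["handler"](params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        return json.dumps({"jsonrpc": "2.0", "id": req_id,
                           "error": {"code": -32601, "message": "Method not found"}})
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})


call = {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
        "params": {"name": "get_weather", "arguments": {"city": "Paris"}}}
print(handle_request(json.dumps(call)))
```

Because every server answers the same request shapes, a client can discover and call tools on any MCP server without tool-specific glue code.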

We think protocols like this are going to help standardize how agentic systems are built and accelerate deployments across the board.

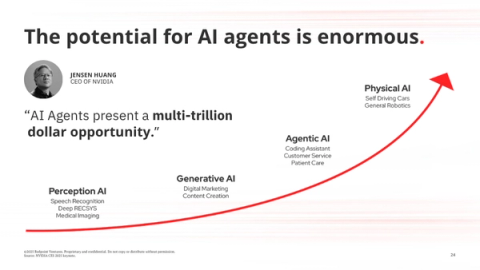

We cannot overestimate the impact AI agents will have.

As Jensen said, this is a multi-trillion dollar opportunity.

AI agents have the potential to drastically change our lives, not just in enterprise settings, where they can automate labor-intensive work like coding or customer support, but also in the physical world through autonomous vehicles and robotics.

These are all different types of agentic systems, and having a mature AI infrastructure ecosystem is going to be necessary to enable deployments at scale.

We are still in the very early days of agents, and we’re very excited about the opportunity ahead.

Read the full InfraRed Report here.